Concept Map

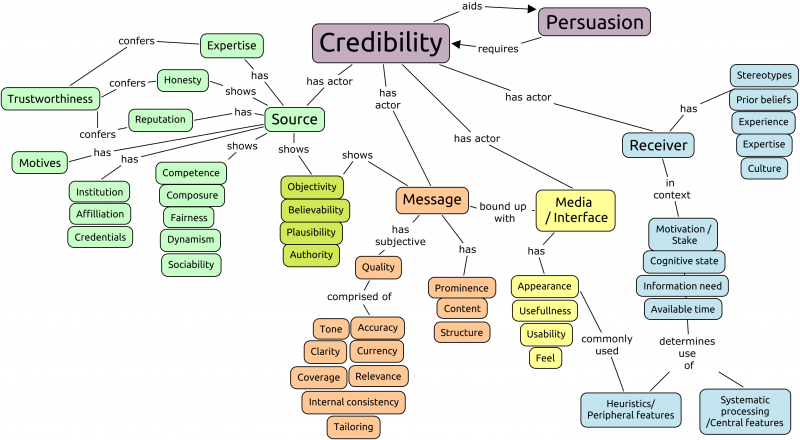

This is an attempt to bring together research relating to credibility on the web. The bibliography will hopefully expand over time, and I will organise the papers into groups according to theme. Concepts and definitions of credibility are many and various, but I hope the concept map above captures most of the key features.

Based largely on the reviews by Hilligoss & Rieh (2008), Metzger (2007) and Wathen & Burkell (2002) listed in the bibliography. Concept map created with CmapTools.

Much of the earlier work on credibility focussed on web pages, and a smaller number of studies have assessed social contributions to collaboratively edited sites. I have also found fewer so far that focus on the impact of particular interface features and the use of separate heuristics, as opposed to overall ‘page-level’ assessments - these finer-grained details are interesting and important.

Bibliography

HILLIGOSS, B. and RIEH, S.Y., 2008. Developing a unifying framework of credibility assessment: Construct, heuristics, and interaction in context. Information Processing & Management, 44(4), pp. 1467-1484

http://www.sciencedirect.com/science/article/pii/S0306457307002038

System/Topic: Information seeking generally

Method: Grounded theory. 24 undergraduates kept a diary recording one information-seeking task a day for ten days, followed by an in-depth interview.

Findings: Defined three levels of credibility judgement: construct (personal definition of credibility, including believability, truthfulness and objectivity), heuristic (general rules of thumb) and interaction (specific source and content cues, including corroboration when participants lacked prior knowledge).

Noteworthy: Importance of endorsement-based heuristics (p1477); use of a range of source types in the study; going beyond simple credibility definitions / dimensions

Keywords: concepts, heuristics.

HU, Y. and SUNDAR, S.S., 2010. Effects of Online Health Sources on Credibility and Behavioral Intentions. Communication Research, 37(1), pp. 105-132

http://crx.sagepub.com/content/37/1/105.abstract

System/Topic: Web-based health information

Method: Assessed behavioural intentions of students (N=500) based on seeing screenshots of the same information framed as a blog, home page, forum or web site. Also gathered ratings of message attributes and the extent of perceived information gatekeeping and source expertise.

Findings: Source of information was significant in credibility judgements. Blogs and discussion forums were seen to be more complete. Perceived level of editorial and moderator gatekeeping was significant.

Noteworthy: Need for more nuanced understanding of source layering and interaction. Importance of collective (forums, sites) over individual gatekeeping (blogs, homepages).

IDING, M., CROSBY, M., AUERNHEIMER, B. and KLEMM, E.B., 2009. Web site credibility: Why do people believe what they believe? Instructional Science, 37(1), pp. 43-63

http://dx.doi.org/10.1007/s11251-008-9080-7

System/Topic: Web-based information

Method: Content analysis of student assessments and student ratings of sites related to their courses.

Findings: Information content quoted as most important, though name recognition of the source was also significant. Look and feel also important. Vested interests of sources - education tended to be treated positively, commercial interests negatively. Corroboration important in assessing site inaccuracies.

Noteworthy: Persuasiveness of personal testimony appearing on a page (p58); recommendations for web evaluation training - including teaching corroboration skills.

JIANU, R. and LAIDLAW, D., 2012. An evaluation of how small user interface changes can improve scientists’ analytic strategies. Proceedings of the SIGCHI Conference on Human Factors in Computing Systems 2012, ACM, pp. 2953-2962

http://doi.acm.org/10.1145/2207676.2208704

System/Topic: Scientific analysis interfaces / visual analytics

Method: Students solved protein interaction tasks with an experimental online interface. One group was given amended interfaces between sessions, adding “nudges” toward better use of the interface, simultaneous consideration of evidence (to avoid over-reliance on working memory) and more evidence gathering for hypotheses.

Findings: Significantly more hypothesis and evidence gathering and hypothesis switching observed in the test conditions. No evidence of confirmation bias, but conjunction bias and single-attribute analysis were demonstrated.

Noteworthy: Study well informed by cognitive theory; attempted to mimic a real-world task and manipulate the interface for more “normative” outcomes.

Keywords: analytic biases; persuasive technology; visual analytics.

METZGER, M.J., 2007. Making sense of credibility on the Web: Models for evaluating online information and recommendations for future research. Journal of the American Society for Information Science and Technology, 58(13), pp. 2078-2091

http://dx.doi.org/10.1002/asi.20672

System/Topic: Web-based information

Method: Review of own and others’ studies

Findings: Primacy of presentation and design features in credibility judgements. Scope and accuracy are also among the criteria users apply most often; identity and authority less so. Verifying the author’s qualifications is rarely done, though it is the most important dimension for credibility. Credibility judgements are situational, so consideration of the receiver’s state is important. Need for a dual-process oriented model that distinguishes peripheral/heuristic from central/systematic assessments.

Noteworthy: People’s reported behaviour differs from their actual behaviour when observed (fewer cues used in practice) - hence a greater need for direct observation; need for simpler checklists or heuristics that users can more easily apply.

METZGER, M.J., FLANAGIN, A.J. and MEDDERS, R.B., 2010. Social and Heuristic Approaches to Credibility Evaluation Online. Journal of Communication, 60(3), pp. 413-439

http://dx.doi.org/10.1111/j.1460-2466.2010.01488.x

System/Topic: Online information seeking

Method: Focus groups with a range of ages and abilities

Findings: Social cues (testimonials, ratings) widely used as credibility cues, though a balanced set of reviews is trusted more than reviews that are all positive. Social confirmation of opinion important. Trust placed in the endorsements of “enthusiasts” - particularly active users. Set of heuristics at play in source and message credibility judgements: reputation, endorsement (bandwagon), consistency (corroboration), expectation violation, persuasive intent.

Noteworthy: Stresses the importance of social cues and arbitration, unlike many previous credibility studies. Notes new model of bottom-up authority enabled by the social web.

NÉMERY, A., BRANGIER, E. and KOPP, S., 2011. First Validation of Persuasive Criteria for Designing and Evaluating the Social Influence of User Interfaces: Justification of a Guideline. 6770, pp. 616-624

http://dx.doi.org/10.1007/978-3-642-21708-1_69

System/Topic: HCI expert consultation

Method: Development of an evaluation grid for persuasive interfaces incorporating static (credibility, privacy, personalisation, attractiveness) and dynamic (solicitation, priming, commitment, ascendancy-addition) features. Validation of grid with HCI experts - used to evaluate 15 sample interfaces.

Findings: Claims high level of correct identification of persuasive features and hence expert agreement.

Noteworthy: Attempt to provide a standard tool and framework for evaluation of persuasiveness of interfaces - “persuasive strength”. No real ethical judgement on persuasion, but largely construed positively. Criteria for the credibility dimension were that it should be possible to identify the reliability, expertise level and trustworthiness of the information.

SUNDAR, S.S., KNOBLOCH-WESTERWICK, S. and HASTALL, M.R., 2007. News cues: Information scent and cognitive heuristics. Journal of the American Society for Information Science and Technology, 58(3), pp. 366-378

http://dx.doi.org/10.1002/asi.20511

System/Topic: Online news (Google News)

Method: Online assessment of undergraduates (500+) who rated items as newsworthy, credible and worth clicking on. Experiment manipulated the sources, freshness and number of related sources to assess their impact.

Findings: Credibility of the source was the overriding factor in assessments, though freshness and related articles became important with less immediately credible sources. Interestingly, the effect of the number of related sources was bipolar - either a high or a low number made the story more attractive.

Noteworthy: Claims for the perceived objectivity of news aggregators, because they are completely automated and hence seen by some to be neutral - evidence quoted for this in the introduction. Dual process theory and cognitive heuristics in relevance judgements.

Keywords: cues, heuristics.

SUNDAR, S.S., XU, Q. and OELDORF-HIRSCH, A., 2009. Authority vs. peer: how interface cues influence users. ACM, pp. 4231-4236

http://doi.acm.org/10.1145/1520340.1520645

System/Topic: Product reviews and purchase intention

Method: Students and the public viewed camera product pages with Editor’s choice “seals” from authoritative or non-authoritative sources and user reviews (majority negative or majority positive) to trade off bandwagon and authority heuristics.

Findings: Bandwagon cues (positive reviews) were found to be more powerful than the authority of the seal. Authority cues came more into play when bandwagon cues were contradictory.

Noteworthy: Authors suggest adaptation of interface to user’s level of interest/involvement (as high involvement should mean less use made of social cues).

Keywords: psychology; user interfaces; web design.

WATHEN, C.N. and BURKELL, J., 2002. Believe it or not: Factors influencing credibility on the Web. Journal of the American Society for Information Science and Technology, 53(2), pp. 134-144

http://dx.doi.org/10.1002/asi.10016

System/Topic: Credibility generally but focusses on online health info.

Method: Review

Findings: A range of credibility dimensions and criteria identified in different studies. Notes that most focus on source and message credibility and there is less emphasis on the receiver. Also, that studies don’t often differentiate message and medium.

Noteworthy: Discussion of Tseng and Fogg’s (1999) gullibility error or “blind faith” (typical of novices) and incredulity error or “blind skepticism” (typical of experts); conflict between credibility attributes (MDs rated as credible for health information on the web despite having least reader-friendly messages).

Keywords: review, concepts.

WESTERMAN, D., SPENCE, P.R. and VAN DER HEIDE, B., 2012. A social network as information: The effect of system generated reports of connectedness on credibility on Twitter. Computers in Human Behavior, 28(1), pp. 199-206

http://www.sciencedirect.com/science/article/pii/S0747563211001944

System/Topic: Twitter users as sources (of H1N1 information)

Method: 281 participants shown mock Twitter profiles, manipulating follower numbers and follower/following ratios. User credibility ratings and behavioural intention indicators gathered.

Findings: Overall follower count not correlated with perceived competence of source (though medium follower numbers slightly more trustworthy). A narrow gap between follower and followed count perceived as more competent.

Noteworthy: A proposed “Goldilocks” heuristic that middle-ground follower and followed counts are most trustworthy. Links made to Social Information Processing Theory (SIPT), though notes that a shorter interaction history may be needed on the social web than that theory suggests.

Keywords: Social media; News; Online credibility; System-generated cues; Computer-mediated communication.